DHH, the éminence grise of the Ruby on Rails world, took a swipe at the test-first cult with his provocative article "Testing like the TSA", saying in effect that 100% test coverage is mad, bad, crazy behaviour, worthless, and an overall affront to good taste and a crime against humanity. [I paraphrase.] Since we enforce 100% code coverage at all points through our development process, I want to explain how this does not necessarily make us time-wasting, genital-fondling idiots, how the needs of our business drive our quality strategy, and how this pays off for us.

At Sage our customers demand and deserve the best we can deliver. We are very quality focused because we build accounting solutions in which getting the right answer matters a great deal: perhaps some customers don't care about quality, but ours demonstrably do. Perhaps in some cases time-to-market is much more important than reliability or maintainability: it is a business decision, and there is no one-size-fits-all answer. However, if you're building for the future and want to avoid years of functional paralysis and a costly rewrite, building an application on a solid quality foundation makes a lot of economic sense.

Write less code

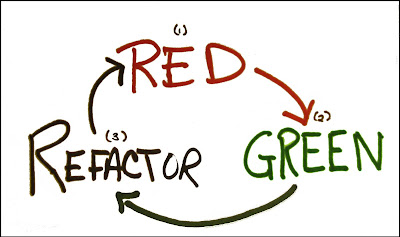

The most effective way to maintain 100% test coverage is by writing less code. We refactor like crazy, and we refactor our tests just as much as our code. We don't repeat ourselves. We spend time creating the right abstractions and evolving them. Having 100% test coverage makes it much easier for us to do this: it is a virtuous cycle.

We've been doing Rails development at Sage for five years now, and we've learned a few lessons. Even if you're writing unit tests with 100% code coverage, you're doing it wrong if:

- Generators are used to build untested code (i.e. using the default Rails scaffolds to build controllers and views)

- Partials are the most sophisticated method of generating views, and they look like PHP or ASP

- The tests are harder to understand than the code

What is the alternative? Well, if all of the controllers and views look pretty much the same, factor them out. The Rails generators create enormous amounts of crappy, unmaintainable boilerplate code – every bit as much as a Visual Studio wizard. On the other hand, if the controllers and views are each completely different and unique flowers, is it for a good reason or is the code just a mess? Chances are, if the code looks like a mess, so does the app.

In my experience it's also basically useless to attempt to retrofit unit test code coverage onto a project that doesn't have it: the tests that wind up being written are always written to pass, and they rarely help much. I haven't yet seen a project that could be rescued from this situation.

Whom do you trust?

When DHH says that the use of ActiveRecord associations, validations, and scopes (basic Rails infrastructure) shouldn't be tested, he's claiming that Rails is never wrong: not now, not in the future, not ever. It's his choice to make that promise, but it would be irresponsible of us to believe it:

- Rails changes all of the time. Sometimes there are even bugs! (Crazy talk, I know!) But ActiveRecord associations and scopes are complex and ornery, and can easily be broken indirectly (through a change elsewhere in the code).

- Because we operate on the Internet, new security risks and fixes appear constantly: zero-day attacks are real. We need to react to these threats quickly, and being able to prepare and deploy new versions of our apps based on updated components immediately is crucial. Having a robust test suite makes it much cheaper and less stressful to implement these changes, which drives down technical debt and makes development more responsive, and oh yeah, helps prevent a costly rewrite.

- We use components that extend and complement the behaviour of Rails. DHH calls out testing validations as particularly useless. Well, what about when the validation methods change in a Rails upgrade? Or you want to adopt a new plugin that changes core Rails behaviour? Or you want to refactor an application to move validation to a more useful place? In all of those cases the tests on validation code would be useful.

Often, testing basic infrastructure like this means a function in a spec mirroring a function in a model (but with enough difference in naming and syntax to be truly maddening). Yes, this feels stupid sometimes, but it is a very cheap insurance policy, and sometimes it pays off.
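To make the "spec mirrors the model" shape concrete, here is a minimal sketch in plain Ruby so that it runs without Rails. The `Invoice` class, its two rules, and the `check` helper are all hypothetical stand-ins: in a real Rails app the rules would be declared with `validates` and the checks would live in a spec file.

```ruby
# Hypothetical stand-in for a model. In a Rails app these two rules would be
# declared with `validates` instead of being hand-written in `valid?`.
class Invoice
  attr_reader :customer, :total, :errors

  def initialize(customer:, total:)
    @customer = customer
    @total = total
    @errors = []
  end

  def valid?
    @errors = []
    @errors << "customer can't be blank" if customer.to_s.strip.empty?
    @errors << "total must be non-negative" unless total.is_a?(Numeric) && total >= 0
    @errors.empty?
  end
end

# Hypothetical spec-style helper: raises if the block returns a falsy value.
def check(description)
  raise "FAILED: #{description}" unless yield
  puts "ok: #{description}"
end

# Each check mirrors one rule in the model, one for one.
check("accepts a complete invoice") { Invoice.new(customer: "Acme", total: 10).valid? }
check("rejects a blank customer")   { !Invoice.new(customer: " ", total: 10).valid? }
check("rejects a negative total")   { !Invoice.new(customer: "Acme", total: -1).valid? }
```

The duplication is obvious, and that is the point: if an upgrade or a plugin quietly changes what "blank" or "non-negative" means, one of these checks fails instead of a customer's ledger.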

Time split

DHH says that you shouldn't be spending more than 1/3 of your time writing tests. This leads to a question: how are you characterizing your time? Is the person doing the implementation also the person making design decisions? If you are doing behaviour-driven development you are actually vetting the requirements at the time you write the tests, so is it a good idea to skip that part and move on to the coding? If you spend time refactoring tests to speed up the test process, should that be counted? Should the time spent writing tests before fixing bugs be counted? Have you decided to outsource quality to a bunch of manual testers? What is your deployment model? I'm reluctant to put a cap on the time writing tests. I find this metric as useful as dictating the time spent typing vs. reading, or the amount of time thinking vs. talking: my answer is not yours, and the end result is what matters.

Risk assessment

We enforce 100% test coverage because it ensures that no important line of code goes completely untested. One can decide to write tests for "important" code and ignore the "unimportant" code, but unfortunately a line of code only becomes "important" after it has failed and caused a major outage and data loss. Oops!
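As a rough illustration of what "no line goes completely untested" means mechanically, the sketch below uses Ruby's standard `coverage` library to list lines that were never executed. This is an assumption-laden toy, not a description of our tooling: `signed_amount` and `uncovered_lines` are hypothetical names, and a real project would normally lean on a coverage tool wired into the build rather than hand-rolled code like this.

```ruby
require "coverage"
require "tempfile"

# Hypothetical code under test. It is written to a temp file because the
# coverage library only tracks files loaded after Coverage.start.
SOURCE = <<~RUBY
  def signed_amount(amount, kind)
    if kind == :credit
      amount.abs * -1
    else
      amount.abs
    end
  end
RUBY

# Loads `source`, runs the given block (the "tests"), and returns the
# 1-based numbers of executable lines that were never run.
def uncovered_lines(source)
  file = Tempfile.new(["ledger", ".rb"])
  file.write(source)
  file.close
  Coverage.start
  load file.path
  yield
  # Match on the file name in case the reported path differs from file.path.
  counts = Coverage.result.find { |path, _| File.basename(path) == File.basename(file.path) }.last
  # nil marks non-executable lines; 0 marks executable lines that never ran.
  counts.each_index.select { |i| counts[i] == 0 }.map { |i| i + 1 }
ensure
  file&.unlink
end

# Exercising only the debit branch leaves the credit line untested.
partial = uncovered_lines(SOURCE) { signed_amount(100, :debit) }
puts "uncovered after debit-only test: #{partial.inspect}"

# Exercising both branches leaves nothing untested.
full = uncovered_lines(SOURCE) do
  signed_amount(100, :debit)
  signed_amount(100, :credit)
end
puts "uncovered after both tests: #{full.inspect}"
```

A build gate is then a one-liner: fail whenever the list of uncovered lines is not empty. Nobody has to argue in advance about whether the credit branch was "important".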

DHH avers that the criticality and likelihood of a mistake should be considered before deciding to write a test about something. However, this ignores a third criterion: cost. Is it cheaper to spend time weighing criticality and likelihood for every single line of code before deciding whether to test it, or is it cheaper to just write the stupid test and be done with it? Given the cost of doing a detailed long-term risk analysis on every line of code, does anybody ever really do it, or is the entire argument just an elaborate cop-out? The answer gets a lot clearer once you elect to write a lot less code, and it gets easier once you resign yourself to learning a new skill and changing your behaviour.

Closing

Code coverage is a great way to measure the amount of exposure you have to future changes, and depending on your business, it might be necessary to have 100% coverage. A highly respected figure speaking ex cathedra can be very wrong when it comes to the choices you need to make, and sometimes it shows. 100% code coverage may seem like an impossible goal, especially if you've never seen it done. I'm here to tell you it's not impossible: it's how we work, and in our case it makes a lot of sense.